TWH – Many problems between Facebook and its users seem to occur in four basic areas. While none of these issues is unique to Pagans, they do affect our community in various ways.

First, Facebook’s explanation of how it enforces its Community Standards and handles violations is not very user-friendly. Second, any expectation of privacy on Facebook is seriously in question. Third, there is no easy way to resolve the conflict between the ideology of free speech and the problem of hate speech.

Fourth and finally, Facebook claims to be a community space for information exchange, yet it makes its huge profits from selling advertising and user data. Its highly profitable business model conflicts with its highly seductive public image as a community information exchange.

In assessing all of these areas, the sheer volume of active users and posts has to be taken into consideration. The ratio of monthly active users to human moderators is also a major factor in how Facebook manages and polices posts.

Facebook reported an estimated 2.5 billion monthly active users worldwide for the last quarter of 2019. By comparison, its monthly active users first reached 1 billion in 2012. Its user base therefore more than doubled in about seven years, making it the largest social media platform.

To put this in perspective, the world’s population is about 7.8 billion, which means that roughly one-third of all people on Earth use Facebook.

In the U.S., the share is even higher: 183 million monthly active users out of a population of 329.45 million, or about 56 percent. Users post an estimated 5 billion comments on Facebook each month, which averages out to roughly 167 million comments a day. They also upload approximately 300 million photos to Facebook per day.

In contrast to the user numbers and posts, Facebook reports employing a total of 44,942 people as of December 31, 2019.

It’s difficult to extrapolate from the overall numbers how many Pagans are active users or how many might actually be employed by Facebook. The combined number of Pagans in Western nations is estimated at over 1 million, but it is hard to say how accurate that figure is.

Is Facebook’s violation and discipline process user-friendly?

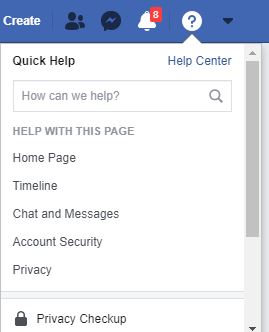

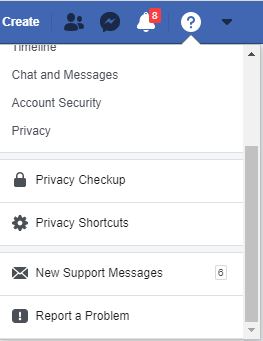

If a user wants to read Facebook’s Community Standards, they have to click the “Quick Help” button on the ribbon at the top of the “Newsfeed” page and then select “Support Inbox.” A link to the Community Standards appears at the bottom right. The Community Standards could easily have their own button on the ribbon.

A quick inspection of Facebook’s “Newsfeed” page failed to find any readily identifiable links to how Facebook enforces its Community Standards.

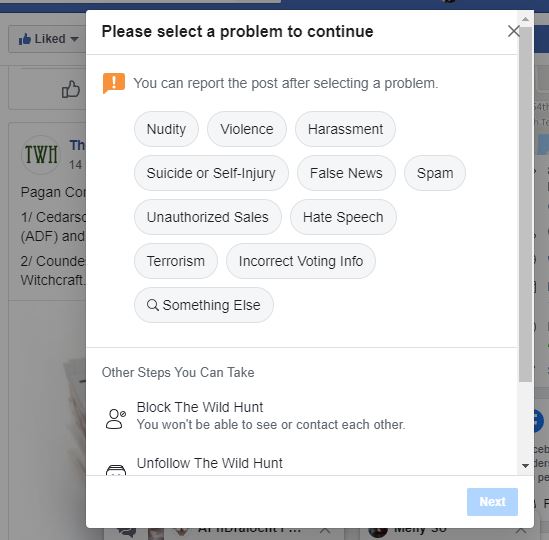

If a Facebook user finds a post problematic, they have two options. (Note: the examples shown are how these options appear on a desktop computer, not a mobile device.) The first option for reporting an individual post is to click the three dots in the upper right-hand corner of the post, which opens a drop-down menu. Selecting “Find Support or Report Post” opens another pop-up window with more options.

The second option is to click the “Quick Help” button on the toolbar ribbon on the Newsfeed page. This option is not even visible on the first click. The user has to scroll all the way down to the last item and select “Report a Problem,” which links to a page titled “How to Report Things on Facebook.”

Should Facebook users have an expectation of privacy on Facebook?

According to Facebook’s own counsel, “There is no invasion of privacy at all, because there is no privacy.” Facebook attorney Orin Snyder made this statement in May 2019 during a pretrial hearing on a motion to dismiss a lawsuit stemming from the Cambridge Analytica scandal, as reported by the legal news site Law360.

That class-action lawsuit is still in progress and is unlikely to be settled anytime soon. The schedule currently extends to late October 2021.

There are numerous examples of how Facebook’s reporting and policies can be manipulated to flag and remove posts and accounts. TWH has previously reported on such incidents.

Facebook claims its Community Standards will make users feel safe. However, some users have figured out how to manipulate this opaque process, and harassment can ensue.

How posts can be shared depends entirely on how a user’s individual privacy settings are configured, as well as on the settings for individual posts and pages. In light of what the Cambridge Analytica scandal revealed, no matter how tightly Facebook users lock down their pages, they probably should not have much expectation of privacy on Facebook. Privacy on Facebook may be more like that of a crowded coffeehouse than that of someone’s living room. After all, it’s social media, not private media.

Conflicts between the ideology of free speech and hate speech

Facebook defines “hate speech” as a direct attack on individuals based on their group identity linked to certain protected characteristics. Facebook identifies those characteristics as being “race, ethnicity, national origin, religious affiliation, sexual orientation, caste, sex, gender, gender identity, and serious disease or disability.”

To its credit, Facebook published an article titled “Hard Questions: Who Should Decide What Is Hate Speech in an Online Global Community?” In that article, Facebook admits that defining “hate speech” in a global context is difficult. The article stresses that whether something counts as hate speech depends on context and intent.

TWH’s own columnist, Storm Faerywolf, had their account suspended for 24 hours over a somewhat snarky Solstice meme. We do not know whether the suspension resulted from the meme’s profanity being directed at a specific religion, or from someone seeing it, reporting it, and encouraging others to report it as well.

Marginalized people frequently vent their frustration and exasperation at the groups they feel have marginalized them, and they joke at the expense of the groups they hold responsible. As Faerywolf’s post illustrates, Facebook sometimes labels this kind of speech “hate speech.”

Hate speech is more nuanced than a binary category, and its boundaries are blurry. Humans disagree about where those boundaries lie, which makes hate speech difficult to identify algorithmically. Universal agreement on what constitutes hate speech does not yet exist, and it may never.

Since Facebook relies largely on algorithms to screen the hundreds of millions of posts made every day, it is inevitable that some posts and accounts will be flagged without real cause. The disparity between the number of users and posts and the number of Facebook employees alone makes it likely that a fair number of reported posts will slip through the cracks, even when they lead to temporary account suspensions that require human review.

Is Facebook a community information exchange or a platform to sell data and advertising?

Facebook markets itself as a friendly, open community with a free exchange of information. It claims its Community Standards will make its users feel safe. However, Facebook makes huge profits selling data and advertising, which in reality makes the user the commodity. A cynic might suspect that Facebook is motivated less by users’ feelings of safety than by fear that controversy could drive advertisers away.

Facebook’s algorithms determine which posts you see, in part by estimating the probability that you will find a given post engaging. Facebook’s goal is to keep its users engaged and online for as long as possible. This engagement creates a target audience for its advertisers, making the users themselves the actual product.

On January 22, the Global Alliance for Responsible Media (GARM) presented its plan to ensure digital safety to the World Economic Forum at Davos, where the economic elite from around the world gather. GARM wants to stop advertisers from funding harmful content. According to GARM, over a three-month period in 2019, YouTube, Facebook, and Instagram removed 620 million pieces of harmful content, most of which they caught before anyone saw it. In that same period, 9.2 million pieces of harmful content still became available for viewing. GARM says that it and the World Economic Forum seek to improve the “safety of digital environments,” which suggests that corporate monitoring of online content will increase.

Facebook is the behemoth of social media, and as such, it has the ongoing potential to set the standards for similar platforms. One large company having that much control may not bode well for marginalized groups like Pagans.

However, the issues with Facebook are not dichotomous. A social media site can be a space for community sharing, a source of mined data, and an advertising platform all at once. Ultimately, users have to decide individually whether the benefits outweigh the problems.

The Wild Hunt is not responsible for links to external content.
